In 2015, we were delighted to receive a Catalyst Grant from the O’Brien Institute for Public Health (OIPH) in support of development of various aspects of OpenLabyrinth as an educational research platform.

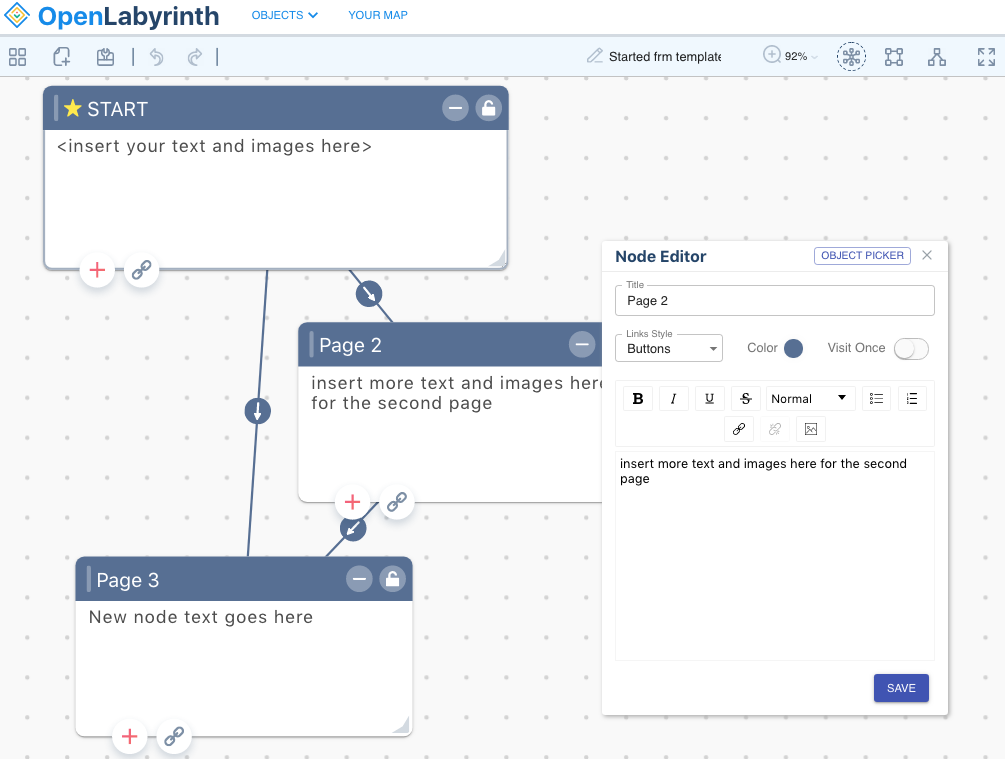

We have just reported on what arose as a result of this grant and, in general, we are pretty pleased with what came out of it and where things are headed. OpenLabyrinth continues to be used widely in the educational community and that reach is growing.

But how do we know?

This is more challenging to assess than you might think. Using standard literature search techniques, it is not hard to find journal articles that relate to the ongoing and innovative use of OpenLabyrinth. But that is only a small part of the impact. To give credit to OIPH and its reporting template, it is great to see that they also want to know about societal impacts, social media and so on. This is something that we strongly agree with in OHMES.

But the actual measurement of such outputs is not so easy. An obvious way to do this would be via Altmetric, which is revolutionizing how such projects and outputs are seen by the public. It has a powerful suite of tools that track mentions and reports in various public channels, social media, news items etc. Great stuff.

But Altmetric requires items to be assigned to departments, institutes or faculties. For OHMES and OpenLabyrinth, this creates a significant problem: there is no ability to assign a tag or category that spans the range of groups and organizations involved. This is somewhat surprising, given that the general approach in Altmetric is ontological, rather than taxonomical (1,2).

In our PiHPES Project, partly as a result of such challenges, we are exploring two approaches to this. Firstly, we are using xAPI and a Learning Record Store (LRS) to directly track how our learners and teachers make use of the plethora of learning objects that we create in our LMSs and other platforms. This is the paradata: data about how things are used, accessed and distributed.
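To make the idea of paradata a little more concrete, here is a minimal sketch of what a single xAPI statement might look like when built in Python. It is only an illustration: the learner, the activity URL and the `build_statement` helper are hypothetical and are not taken from our platforms. The actor/verb/object structure and the `experienced` verb come from the public xAPI specification and ADL vocabulary.

```python
import json
import uuid
from datetime import datetime, timezone


def build_statement(learner_email, learner_name, activity_id, activity_title):
    """Return a minimal xAPI statement (actor, verb, object) as a dict.

    Hypothetical helper for illustration only.
    """
    return {
        "id": str(uuid.uuid4()),
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        "verb": {
            # Verb IRI from the ADL vocabulary
            "id": "http://adlnet.gov/expapi/verbs/experienced",
            "display": {"en-US": "experienced"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_title}},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# Assumed example values: not a real learner or a real case URL
statement = build_statement(
    "learner@example.org",
    "Example Learner",
    "https://example.org/cases/42",
    "Example virtual patient case",
)
print(json.dumps(statement, indent=2))
```

In practice a statement like this would be sent to the LRS by the platform itself (typically as a POST to the LRS's `statements` resource), so each "who did what with which object" event accumulates as paradata.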

Secondly, we are looking for ways to make such activities and objects more discoverable, both through improved analytics on the generated activity metrics and by tying the paradata and metadata together in more meaningful ontologies, using semantic linking.

1. Shirky C. Ontology is Overrated: Categories, Links, and Tags [Internet]. 2005 [cited 2019 May 3]. Available from: http://www.shirky.com/writings/ontology_overrated.html

2. Leu J. Taxonomy, ontology, folksonomies & SKOS [Internet]. SlideShare. 2012 [cited 2019 May 3]. p. 21. Available from: https://www.slideshare.net/JanetLeu/taxonomy-ontology-folksonomies-skos