The Quality Referral Evolution (QuRE) project, www.ahs.ca/qure, is making good progress.

The QuRE Project started in Alberta as an initiative to enhance the quality of clinical referrals and consults in healthcare. With 2.3 million referrals created every year in Alberta alone, the potential for improvements and savings is enormous. Several other Canadian provinces, including Saskatchewan and British Columbia, are now starting to engage in similar, collaborative efforts.

Earlier this year, the Office of Health & Medical Education Scholarship (OHMES) at the Cumming School of Medicine, University of Calgary, became involved with this project. The QuRE group had already done tremendous work to establish an evidence-informed approach to quality referrals and consults. Some educational materials had been created and seminars given.

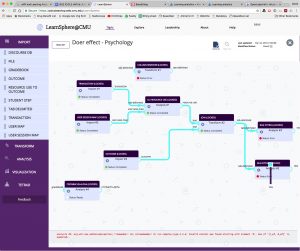

OHMES saw an opportunity to create a more interactive approach to the educational processes of the QuRE Project. Rather than simply telling healthcare learners what to do, we used OpenLabyrinth virtual scenarios and CURIOS video mashups, along with other educational tools such as GrassBlade and PowToons, to create more interactive materials with built-in activity metrics and analytics.

This approach will enable us to continually modify our materials (their own quality evolution, as it were) based on learner performance, rather than relying on yet more questionnaires.

What has particularly excited us at OHMES about this project is that it represents an opportunity to study how an educational intervention can have an impact on patient care. For years, especially in CME circles, there have been repeated calls for educational approaches that can actually demonstrate a change in how we provide care and, ultimately, improved patient outcomes.

One distinctive aspect of this project is that we intend to track longitudinally, over several years, how feedback and these interventions may iteratively improve the quality of referrals and consults in participating groups. Because activity metrics are gathered continuously from across multiple healthcare systems, we will have sufficient data to demonstrate useful changes.