The Experience API (xAPI) provides OLab with some powerful tools to integrate activity metrics as a research tool. But, of course, there is more to it than just capturing and aggregating data.

Data visualization and learning analytics are increasingly important — this is one of the key pillars in our push towards Precision Education. Some of the Learning Record Stores (LRS) come with tools to assist with such analytics. We have spoken of this before: while we currently use GrassBlade as our workaday LRS because it is simple for small pilots, the beauty of the LRS approach is that data can easily be federated across other LRSs. For example, we have made use of the more powerful analytics provided by the Watershed LRS.

However, as we move into more detailed analytics, it is great to be able to work with even more powerful tools. We have just started working with the IEEE ICICLE group on better approaches to such learning analytics. LearnSphere is one such tool: it is used extensively at Carnegie Mellon University, and it is open source and available on GitHub.

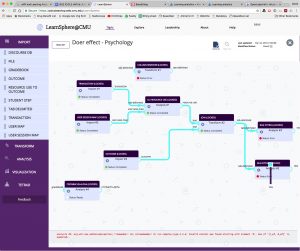

LearnSphere has a powerful set of analytics tools. The screenshot above shows us using the Tigris learning workflows tool to map out good learning designs and scenarios, designed to answer questions such as “which kinds of learner activity are worth measuring?” The datasets can be quite varied, and the LearnSphere group is interested in accommodating a wider range of learning research datasets.

Today’s discussion of the IEEE ICICLE xAPI and Learning Analytics SIG focused on how to integrate xAPI activity streams in a more seamless manner with LearnSphere. We are pleased to be involved with such dataflow integration initiatives. As Koedinger et al. (1) demonstrated in 2016, there is a clear link between “doing” and learning. This is not a new concept at all, but proving it has been remarkably difficult in the world of education, where there are so many confounding factors to consider in a study methodology. This approach, using learning analytics, is much more solid.
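To give a rough sense of what integrating an xAPI activity stream with a tabular analytics tool might involve, here is a minimal Python sketch that flattens xAPI statements into tab-delimited rows. The statement fields (`actor`, `verb`, `object`, `result`, `timestamp`) come from the xAPI specification. The column names, the sample data and the helper names `flatten_statement` and `statements_to_tsv` are our own assumptions for illustration. This is not the import format LearnSphere actually expects, nor the approach the SIG has settled on.

```python
import csv
import io

# Hypothetical column layout chosen for illustration only.
FIELDS = ["learner", "verb", "activity", "success", "timestamp"]


def flatten_statement(stmt):
    """Reduce one xAPI statement (a dict) to a flat row."""
    actor = stmt.get("actor", {})
    # An xAPI actor may be identified by mbox or by an account object.
    learner = actor.get("mbox") or actor.get("account", {}).get("name", "")
    verb_id = stmt.get("verb", {}).get("id", "")
    return {
        "learner": learner,
        # Keep only the last path segment of the verb IRI, e.g. "answered".
        "verb": verb_id.rsplit("/", 1)[-1],
        "activity": stmt.get("object", {}).get("id", ""),
        "success": stmt.get("result", {}).get("success"),
        "timestamp": stmt.get("timestamp", ""),
    }


def statements_to_tsv(statements):
    """Serialize a list of xAPI statements as tab-delimited text."""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf, fieldnames=FIELDS, delimiter="\t", lineterminator="\n"
    )
    writer.writeheader()
    for stmt in statements:
        writer.writerow(flatten_statement(stmt))
    return buf.getvalue()


# Made-up sample statement; the activity URL is a placeholder.
SAMPLE = [
    {
        "actor": {"mbox": "mailto:learner@example.org"},
        "verb": {"id": "http://adlnet.gov/expapi/verbs/answered"},
        "object": {"id": "https://example.org/olab/node/12"},
        "result": {"success": True},
        "timestamp": "2019-03-01T10:00:00Z",
    }
]

if __name__ == "__main__":
    print(statements_to_tsv(SAMPLE))
```

In practice the statements would be pulled from the LRS rather than hard-coded, and the columns would need to follow whatever schema the receiving tool requires.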

1. Koedinger, K. R., McLaughlin, E. A., Jia, J. Z., & Bier, N. L. (2016). Is the doer effect a causal relationship? In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge – LAK ’16 (pp. 388–397). New York, New York, USA: ACM Press. http://doi.org/10.1145/2883851.2883957